Hi all,

Even though I passed Google’s TensorFlow Developer Certification around April last year, the demands of my work have pulled me away from coding and building models, which is a shame, as I was hoping to expand my skill set in this area.

Therefore, I have decided to spend my free time finally attempting Kaggle competitions, starting with the house price prediction regression task. It has already given me broad experience with preprocessing approaches: null imputation, transforming categorical features into numerical ones using one-hot encoding (OHE) and frequency encoding, converting year-based features into ages, etc.

You can read about this in my public repo here
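For anyone who has not met these preprocessing steps before, here is a minimal sketch of what each one does. It is written in plain Python so it runs without dependencies, and it is not the code from the repo. The toy rows, the column names (`neighborhood`, `lot_frontage`, `year_built`, `year_sold`) and the helper functions are all made up for illustration. In a real project, pandas or scikit-learn would normally handle this.

```python
from collections import Counter
from statistics import median

# Toy rows; column names and values are hypothetical, not taken from the dataset.
rows = [
    {"neighborhood": "A", "lot_frontage": 60.0, "year_built": 2000, "year_sold": 2010},
    {"neighborhood": "B", "lot_frontage": None, "year_built": 1990, "year_sold": 2008},
    {"neighborhood": "A", "lot_frontage": 80.0, "year_built": 2005, "year_sold": 2009},
    {"neighborhood": "C", "lot_frontage": 70.0, "year_built": 1950, "year_sold": 2010},
]


def impute_median(rows, column):
    """Fill missing (None) values in a numeric column with the column median."""
    fill = median(r[column] for r in rows if r[column] is not None)
    return [{**r, column: fill if r[column] is None else r[column]} for r in rows]


def frequency_encode(rows, column):
    """Replace each category with its relative frequency in the data."""
    counts = Counter(r[column] for r in rows)
    total = len(rows)
    return [{**r, column: counts[r[column]] / total} for r in rows]


def one_hot_encode(rows, column):
    """Replace a categorical column with one 0/1 indicator column per category."""
    categories = sorted({r[column] for r in rows})
    encoded = []
    for r in rows:
        new_row = {k: v for k, v in r.items() if k != column}
        for cat in categories:
            new_row[f"{column}_{cat}"] = 1 if r[column] == cat else 0
        encoded.append(new_row)
    return encoded


def year_to_age(rows, year_column, reference_column, age_column):
    """Add an age column: reference year minus the year feature."""
    return [{**r, age_column: r[reference_column] - r[year_column]} for r in rows]


imputed = impute_median(rows, "lot_frontage")
with_age = year_to_age(imputed, "year_built", "year_sold", "house_age")
freq_encoded = frequency_encode(with_age, "neighborhood")  # one numeric column
one_hot = one_hot_encode(with_age, "neighborhood")         # one column per category
```

Frequency encoding keeps a single column regardless of how many categories there are, while OHE adds one column per category, which is why the two are often used for high-cardinality and low-cardinality features respectively.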

I focused on what I know, so I started with a neural network, which of course is not the best model type for this problem, given the small size of the dataset, for example. Nonetheless, it allowed me to prepare a robust pipeline in which the model can later be swapped for a better-suited one, such as a decision tree.
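The idea of keeping the pipeline fixed and swapping only the model can be sketched roughly as follows. This is a dependency-free toy, not my actual pipeline: `MeanBaseline`, `DecisionStump`, `fit_imputer`, `apply_imputer` and `run_pipeline` are hypothetical names, and the stump is only a depth-1 stand-in for a real decision tree. The point is that any object with `fit` and `predict` can be dropped in.

```python
from statistics import mean


class MeanBaseline:
    """Predicts the training mean for every row."""

    def fit(self, X, y):
        self.mean_ = mean(y)
        return self

    def predict(self, X):
        return [self.mean_ for _ in X]


class DecisionStump:
    """Depth-1 regression tree: a single split on one feature."""

    def __init__(self, feature=0):
        self.feature = feature

    def fit(self, X, y):
        overall = mean(y)
        # Fallback when no split is possible (only one distinct feature value).
        self.threshold_, self.left_, self.right_ = float("inf"), overall, overall
        best_sse = float("inf")
        values = sorted({row[self.feature] for row in X})
        # Try a threshold halfway between each pair of adjacent distinct values.
        for lo, hi in zip(values, values[1:]):
            threshold = (lo + hi) / 2
            left = [t for row, t in zip(X, y) if row[self.feature] <= threshold]
            right = [t for row, t in zip(X, y) if row[self.feature] > threshold]
            left_mean, right_mean = mean(left), mean(right)
            sse = sum((t - left_mean) ** 2 for t in left)
            sse += sum((t - right_mean) ** 2 for t in right)
            if sse < best_sse:
                best_sse = sse
                self.threshold_, self.left_, self.right_ = threshold, left_mean, right_mean
        return self

    def predict(self, X):
        return [
            self.left_ if row[self.feature] <= self.threshold_ else self.right_
            for row in X
        ]


def fit_imputer(X):
    """Learn one fill value (the column mean) per column, ignoring None."""
    return [mean(v for v in col if v is not None) for col in zip(*X)]


def apply_imputer(X, fills):
    """Replace None with the fill values learned on the training data."""
    return [[fill if v is None else v for v, fill in zip(row, fills)] for row in X]


def run_pipeline(model, X_train, y_train, X_test):
    """Same preprocessing for every model; only the estimator changes."""
    fills = fit_imputer(X_train)
    model.fit(apply_imputer(X_train, fills), y_train)
    return model.predict(apply_imputer(X_test, fills))


# Made-up data: one feature, one missing value.
X_train = [[1.0], [2.0], [None], [10.0], [11.0]]
y_train = [100.0, 110.0, 150.0, 200.0, 210.0]
X_test = [[1.5], [12.0]]

baseline_preds = run_pipeline(MeanBaseline(), X_train, y_train, X_test)
stump_preds = run_pipeline(DecisionStump(), X_train, y_train, X_test)
```

Note that the imputation values are learned on the training data only and then applied to the test data, so switching models never changes how the features are prepared.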

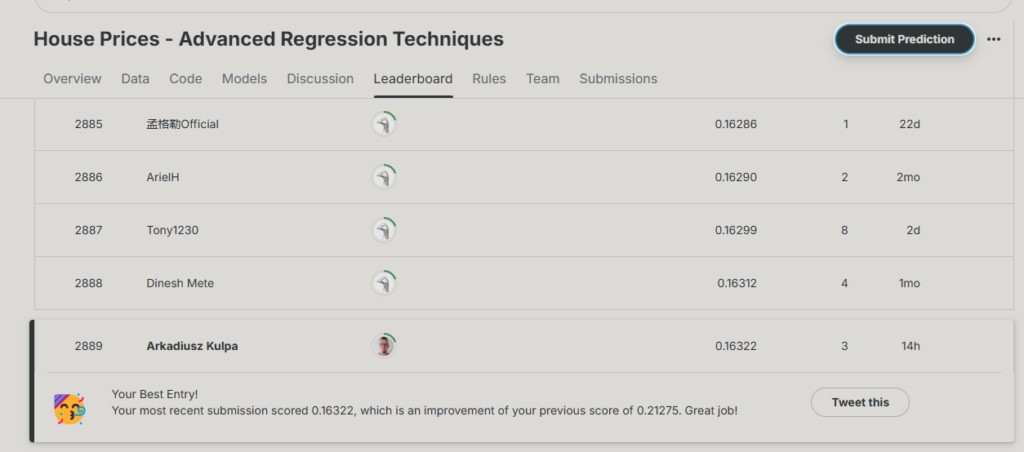

My first competition submission resulted in place 3530 on the leaderboard!

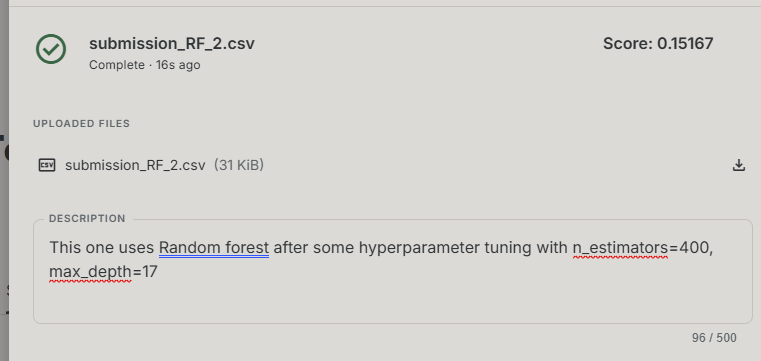

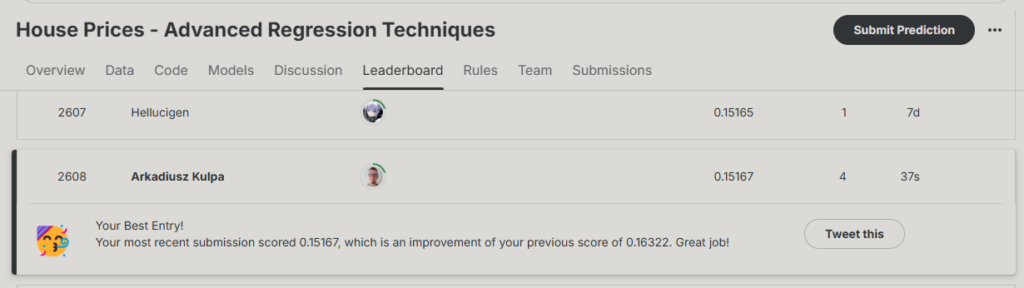

I have since iterated and tried a decision tree and a random forest, which scored slightly better. Continued iterations brought me to place 2608 with a score of 0.15.
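To put the score in context: assuming this is Kaggle's "House Prices: Advanced Regression Techniques" competition, the leaderboard metric is the root mean squared error between the logarithm of the predicted price and the logarithm of the actual sale price. Below is a small sketch of that calculation. The function name `rmse_log` and the prices are made up for illustration.

```python
import math


def rmse_log(y_true, y_pred):
    """RMSE between log(predicted) and log(actual) prices."""
    squared_errors = [
        (math.log(pred) - math.log(true)) ** 2
        for true, pred in zip(y_true, y_pred)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Hypothetical sale prices and predictions.
actual = [200_000.0, 150_000.0, 320_000.0]
predicted = [230_000.0, 130_000.0, 320_000.0]

score = rmse_log(actual, predicted)
print(f"score: {score:.5f}")
```

Because the error is measured on the log scale, it behaves like a relative error: a score of 0.15 means predictions are typically off by a factor of about exp(0.15) ≈ 1.16, so roughly 16%, whether the house is cheap or expensive.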

Update 24.03.25

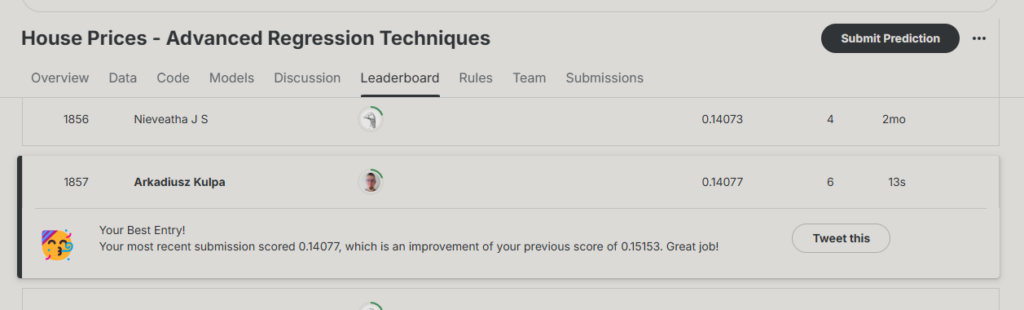

It is surprising how many leaderboard places (800) can be shaved off with a single change (XGBoost + early stopping) and a small improvement in the test score (0.14077 vs 0.15167):