Excel Based Test Schedule with Azure Dev Ops APIs

As part of my Integration Tester / Package Lead role at EQUINITI I developed an automated Excel based Test Schedule that would automatically fetch updates from Azure Dev Ops using APIs to get update on our test progress.

This was necessary because Azure Dev Ops (ADO) was note setup for our functional testing efforts and the management quickly reverted to Excel. When I joined they used a basic excel spreadsheet that had to be manually updated with numbers from Azure Dev Ops by simply going from tab to tab and copying numbers down.

I started working on automating this process and in a few months time we started implementing an automated version. As we know big organisations do not like change, especially when it’s quick, which is why an automated excel spreadsheet allows for reporting to be viewed in a familiar format, while saving countless hours on data preparation as it can be uniformly fetched from ADO and preprocessed for analytics.

The Test Schedule is not being used across the entire project by multiple Test Leads and I actively manage the version, training and troubleshooting.

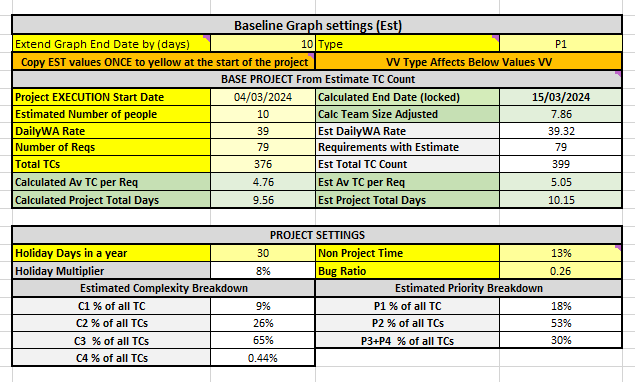

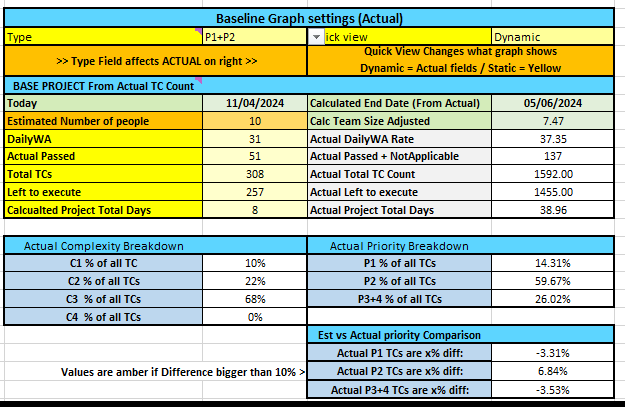

The test schedule is complimented by a python script that allows for the most compute-intensive calculations which were necessary to generate a forecast baseline. This baseline and the end date it provides was key for reporting to stakeholders how the project is performing, which meant my calculations’ formula had to be perfect.

I intend to make a more detailed tutorial of how I made this spreadsheet as i found that many of the solutions I implemented there were not easy to find on the web, for one because of the niche that this problem falls into.

Some of the challenges included:

- How to link the Test Case ID which we had, with the Test Run ID which was hidden away in a different area of ADO (this was accomplished using APIs)

- How to create a mechanics whereby the entire history for a given Test plan can be downloaded

- How to make this download repeatable but only for a segment of the data to avoid having to redownload massive amounts of api data (this was achieved using query updates in Power Query that required tricking the query into updating itself but not overwriting existing data)

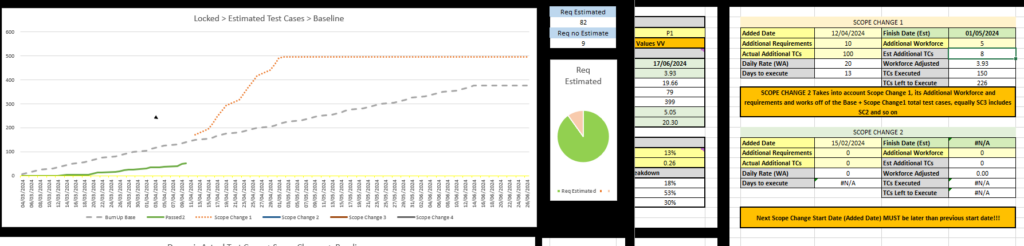

- How to create a dynamic baseline that would autogenerate an excel line graph whenever data is entered for a dynamic set of date ranges that would update with cell inputs

- How to calculate Outcomes for a set of test cases based on hit by hit history that was available from ADO APIs, by turning it into a day by day outcome report using Python.

- And many more hurdles

A new baseline prediction can be generated with a few settings changed in cells nearby to generate a hypothetical scope changes to the baseline should we add more people even during the project

The Test Schedule needs an up to date Outcome value (latest) in order to work out the forecast from today’s situation (e.g. 50 passed, 200 total, 150 left to go, 2 people working, each can do 5 TCs a day, so they can do 150 TC in 150 / (2×5) = 15 work days, etc.)

However the usual BurnUp also should show a graph line made up of past updates, e.g. how many Passed Outcomes were there on each day for the last month. This previously required updating the test schedule manually every day by copying the Passed number from ADO along with other numbers such as Blocked, Failed, Not Applicable.

In this automated version of the test schedule it was impossible to automate this aspect of the process within Excel, due to the number of calculations and iterative nature of them, which was why Python standalone executable was developed by myself as solution to this.

The ADO Analyzer takes in the Test Schedule as input and outputs another .csv spreadsheet with a table that can then be pasted into the Test Schedule that will generate the Outcome statuses (or Outcome history for each day of the project).

The Git Repo is publicly available here. The program uses the Test run history downloaded from ADO into the Test Schedule, which is a step by step record of runs that have been performed on the project to amend statuses of test cases chronologically and save the outcome status for a given day when it moves on to the next day.